I look forward to talking to AECT colleagues on Thursday, 11/9/17, about accommodating crowdsourced data in social research and program evaluation. This conversation complements my future research agenda on using specialized crowds for e-learning evaluation.

|

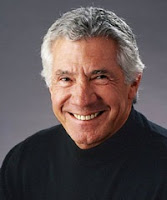

| Steve King aka Dr. Security |

For issues about managing data and cybersecurity, I typically turn to the expertise of Steve King, Netswitch COO/CTO. Learn more about Steve and read what I learned about security and engaging crowds in e-learning evaluation. Steve is happy to advise evaluators on architectural requirements and cloud data security. Reach out to him on Twitter and LinkedIn @sking1145.

Platform Considerations for Human Intelligence Tasks

A secured web conferencing platform or a MOOC separate from a school’s network should support crowdsourced evaluation activities. A secure web conferencing platform that imposes strict access restrictions is necessary to ensure there is no easy avenue to compromise crowd evaluation tasks.

Assuming the crowd will be operating on an external host (a cloud provider like AWS or Azure), the platform ought to be able to offer several services. Cloud providers typically offer content monitoring services so that uploads and downloads can be erased or destroyed at the evaluator's discretion. They also offer metadata backup of the event itself, so key information can be retained without leaving the crowd initiator or evaluator vulnerable to the actual content being compromised.

Data Processing Considerations

The cloud service provider should be able to process large volumes of high-velocity, structured (spreadsheets, databases) and unstructured (videos, images, audio) information, as well as secure all of it. Even if an evaluator chooses to run evaluation tasks in a local private cloud, the provider should offer this level of security.

But it is CRUCIAL to separate the cloud from the school’s network so that crowd members can’t gain access to the school’s information or administrative network. If an evaluator can only run off the school’s system or network itself, she will be forced to set up a subnet at the very least and buy some hardware, which could get more expensive than running the whole thing in a cloud. I would NOT advise an evaluator to try running on the school’s network.

Credentials Considerations

In order to have a clearly defined crowd, crowd initiators must assign every member a credential and use whatever vetting process the evaluator would normally use to determine their appropriateness for participation. An SSN or EIN matched with key locator information, or a student ID that can be validated, could work.

Other Key Platform, Data, and Credential Considerations:

The Federal Risk and Authorization Management Program (FedRAMP), while specifically related to government security requirements, provides compliance guidelines that help ensure a secure web conferencing platform. FedRAMP standards meet the baseline security controls set out by the National Institute of Standards and Technology (NIST).

A web conferencing platform should be FedRAMP compliant, and if not, should employ a layered security model of some sort with the following characteristics:

· Gated access is one important characteristic. Gated access refers to the security options that manage entrance to and usage of virtual rooms employed by a web conferencing platform. Gated access also helps prevent DDoS attacks.

· Platform, data, and credential restriction settings are important. The ability to restrict the hours during which a virtual room can be accessed minimizes the time in which sensitive and vulnerable information can be viewed and compromised. Initiators of crowdsourced HITs will want to be able to monitor remote users. The platform should allow the encryption of all information in transit. It should also allow session locks and the ability to terminate credentials so the crowdsource initiator can manage platform access. Session locks allow evaluators to control who can enter a room at what time. Credential termination should be available both manually and automatically: when a member leaves a room or meeting space, their credentials should no longer work for re-entry without a new login.
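To make these restriction settings concrete, here is a minimal Python sketch of a room that combines an access window, a session lock, and credential termination. The `VirtualRoom` class and all of its names are hypothetical illustrations, not the API of any real conferencing platform, and a production system would rely on the platform's own controls.

```python
from dataclasses import dataclass, field
from datetime import datetime, time


@dataclass
class VirtualRoom:
    """Hypothetical room with an access window, a session lock, and revocable credentials."""
    opens: time
    closes: time
    locked: bool = False
    active: set = field(default_factory=set)   # credentials currently in the room
    revoked: set = field(default_factory=set)  # credentials that no longer work

    def enter(self, credential: str, now: datetime) -> bool:
        if credential in self.revoked:
            return False  # terminated credentials require a new login
        if self.locked:
            return False  # session lock: nobody new gets in
        if not (self.opens <= now.time() <= self.closes):
            return False  # outside the permitted hours
        self.active.add(credential)
        return True

    def leave(self, credential: str) -> None:
        # Automatic termination: leaving the room invalidates the credential.
        self.active.discard(credential)
        self.revoked.add(credential)

    def terminate(self, credential: str) -> None:
        # Manual termination by the initiator, whether or not the member is present.
        self.active.discard(credential)
        self.revoked.add(credential)
```

In this sketch, a member who enters during the window and then leaves cannot re-enter with the same credential, and setting `locked = True` turns away any newcomers regardless of the time.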

The platform should also let evaluators encrypt the event recordings, both at rest and in transit, so that when a crowd initiator shares the contents with evaluation members unable to participate synchronously, only authorized members will have the encryption keys that allow them access.

Initiators will want to be able to define and control roles and access privileges for the crowd, which establish the specific conditions under which a member can interact with a group or room. Role-based access control and dynamic privilege management are keys to this next layer. Role-based access control gives the evaluator ultimate control over who gets to enter which rooms. For example, a member who needs to share information with a key group but should not be allowed direct access to that group can be assigned to a sub-conference room, where a primary conference member can meet them, gain the information, and return to the primary meeting.

Dynamic privilege management allows an evaluator to retain a member’s virtual identity while suspending or adjusting their access privileges, so a member could have their privileges upgraded temporarily for a one-time event and then returned to their prior status. This could also facilitate evaluation requirements for working on individual or group e-learning tasks, and for protecting small human intelligence tasks (HITs).
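As a rough illustration of how role-based access control and dynamic privilege management fit together, the following Python sketch maps roles to rooms and lets an evaluator upgrade, restore, or suspend a member without deleting their identity. The role names, room names, and helper functions are all assumptions made for this example rather than features of a specific platform.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical roles mapped to the rooms each may enter.
ROLE_ROOMS = {
    "primary": {"main-room", "sub-room"},
    "contributor": {"sub-room"},
}


@dataclass
class Member:
    name: str
    role: str
    temporary_role: Optional[str] = None  # one-time upgrade, if any
    suspended: bool = False               # identity kept, access withheld

    def effective_role(self) -> str:
        return self.temporary_role or self.role


def can_enter(member: Member, room: str) -> bool:
    """Return True if the member's current role permits entry to the room."""
    if member.suspended:
        return False
    return room in ROLE_ROOMS.get(member.effective_role(), set())


def upgrade_for_event(member: Member, role: str) -> None:
    """Temporarily grant a different role for a one-time event."""
    member.temporary_role = role


def restore(member: Member) -> None:
    """Return the member to their prior status."""
    member.temporary_role = None
```

Here a `contributor` can reach only the sub-conference room, a temporary upgrade opens the main room for one event, and `restore` puts the member back where they started. Suspension blocks entry everywhere while the `Member` record itself stays intact.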

The conferencing platform should also provide a way to pair a person with unique authenticators that can customize their privileges. This is done through individual access codes, which are essentially the member’s fingerprints. Depending upon the privileges granted, individual access controls keep track of access rules and determine which sessions each member will be allowed to enter. This comes in handy for identifying suspects following an information leak or inappropriate sharing of sensitive material.
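The idea of individual access codes plus an audit trail could be sketched as follows. This is only an assumed design for illustration: the `AccessRegistry` class, its methods, and the session names are hypothetical, and the codes are generated with Python's standard `secrets` module.

```python
import secrets
from datetime import datetime, timezone


class AccessRegistry:
    """Hypothetical registry that issues per-member access codes and logs every entry attempt."""

    def __init__(self):
        self._codes = {}     # access code -> (member name, set of allowed sessions)
        self.audit_log = []  # (timestamp, member or None, session, allowed)

    def issue_code(self, member, sessions):
        code = secrets.token_urlsafe(16)  # unguessable code, unique to this member
        self._codes[code] = (member, set(sessions))
        return code

    def enter(self, code, session):
        # Unknown codes map to no member and no sessions, so they are always refused.
        member, allowed_sessions = self._codes.get(code, (None, set()))
        allowed = session in allowed_sessions
        self.audit_log.append((datetime.now(timezone.utc), member, session, allowed))
        return allowed

    def who_entered(self, session):
        """List members who were admitted to a session, for follow-up after a leak."""
        return sorted({m for _, m, s, ok in self.audit_log if s == session and ok})
```

Because every attempt is logged against the code that made it, an evaluator investigating a leak from a given session can call `who_entered` to narrow the list of members who had access.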